
AgileGuru Engineering blog on innovative solutions and technical excellence by engineers and architects.

Start Small, Expand Later for optimization and control.

Guru Raghupathy, 01 January 2025

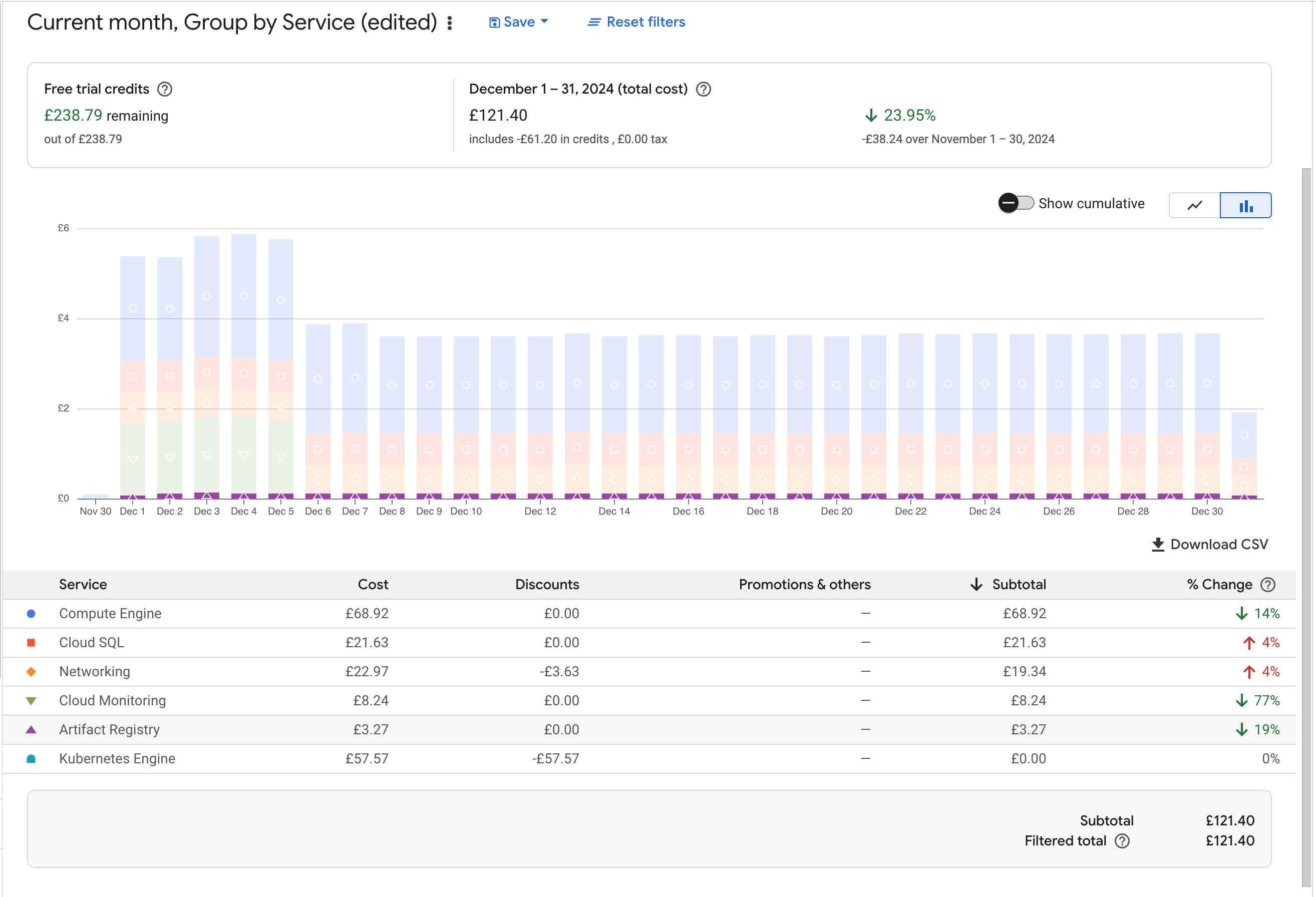

Because the cloud offers seemingly unlimited resources, it is easy to end up with a huge bill if you are not careful. A good approach is to start very small and expand slowly based on metrics rather than provisioning everything upfront. Tools like Terraform and Grafana help with this. Let's explore why this is the case and how you can do it with Terraform, GKE, and Grafana, using GCP as an example.

Assumptions

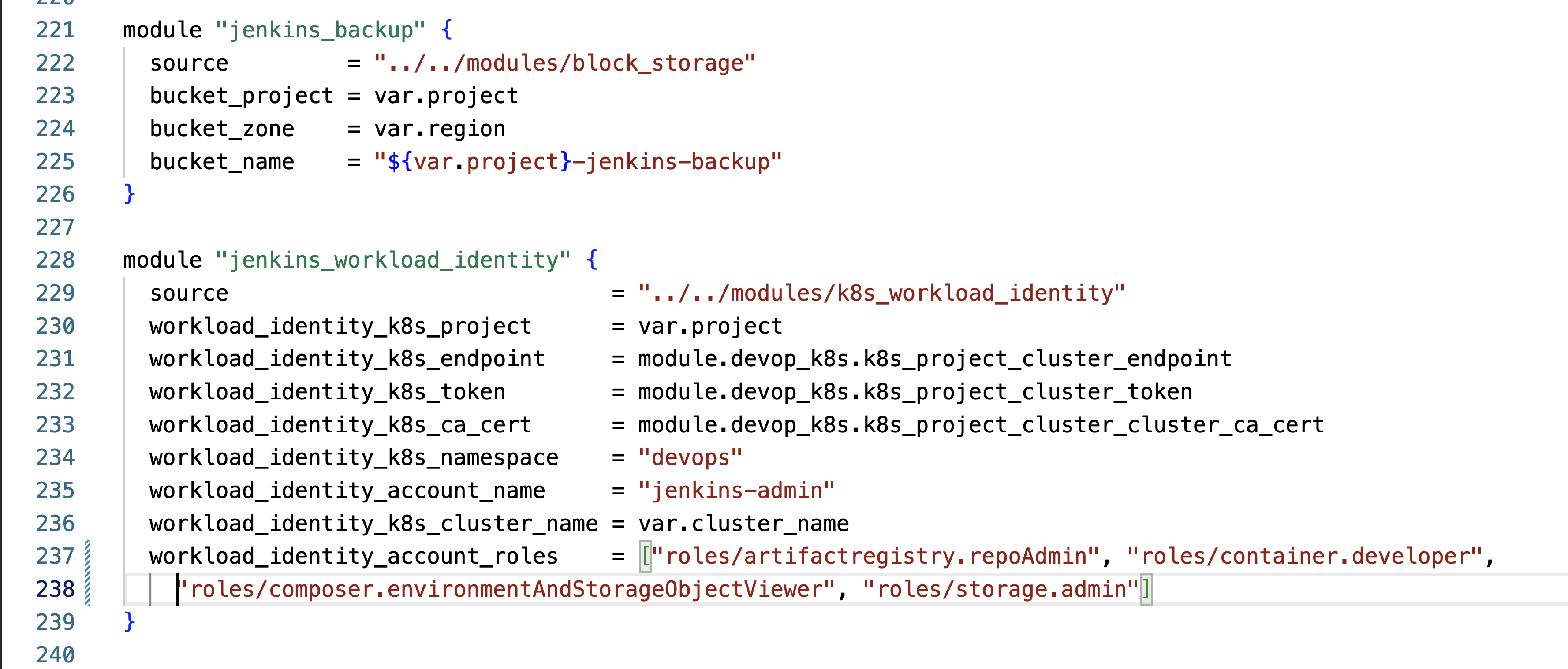

To track changes, define your infrastructure and dashboards with tools like Terraform and Grafana, and keep them version controlled in Git for review and deployment. In our example we use GKE with an external persistent disk and a GCS bucket for storage, a Network Load Balancer in front of the application, and an artifact repository for application artifacts and Docker images.

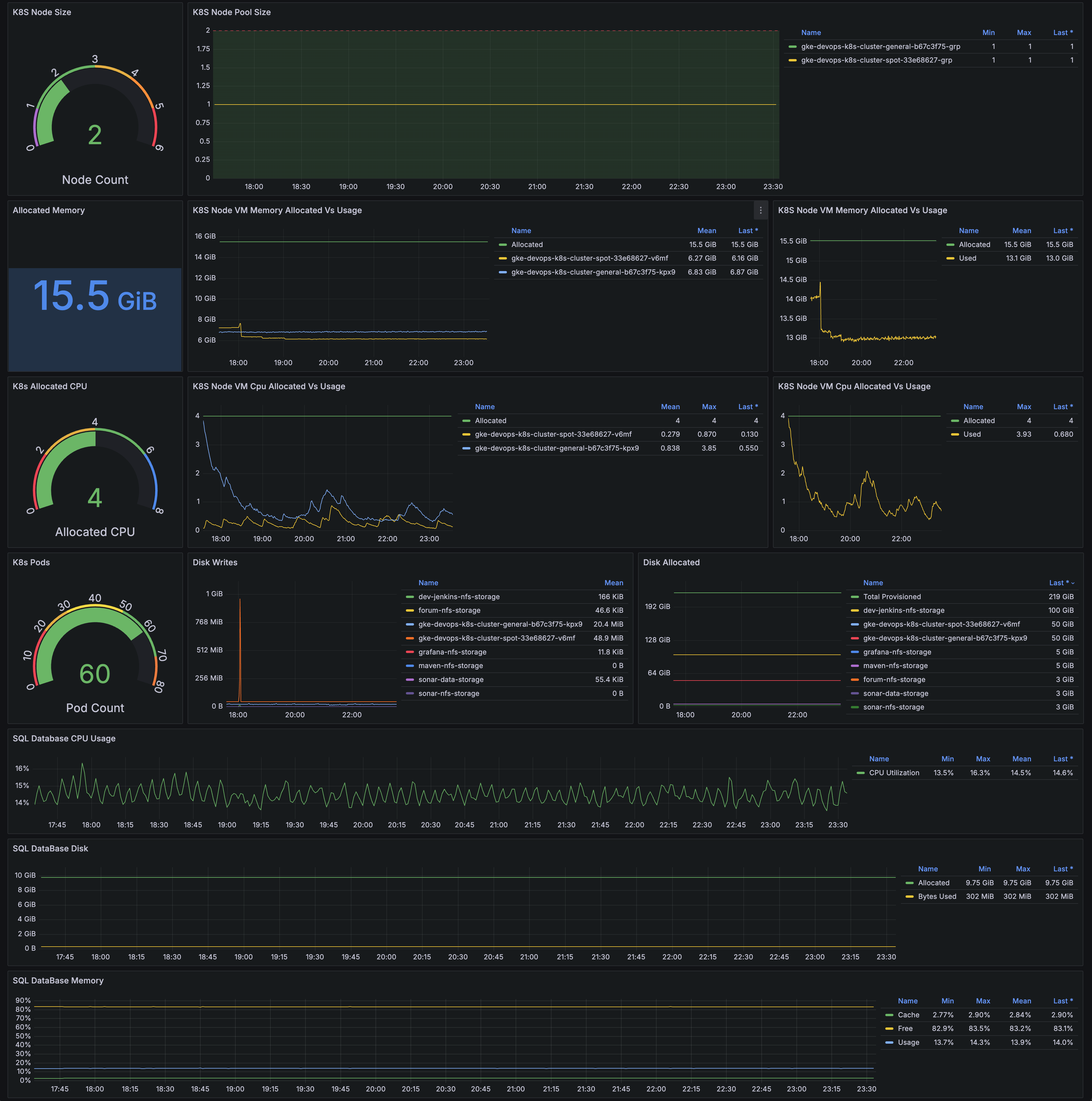

Sample Outcome. Observe the 23.95% saving without any negative impact.

Guides & Steps

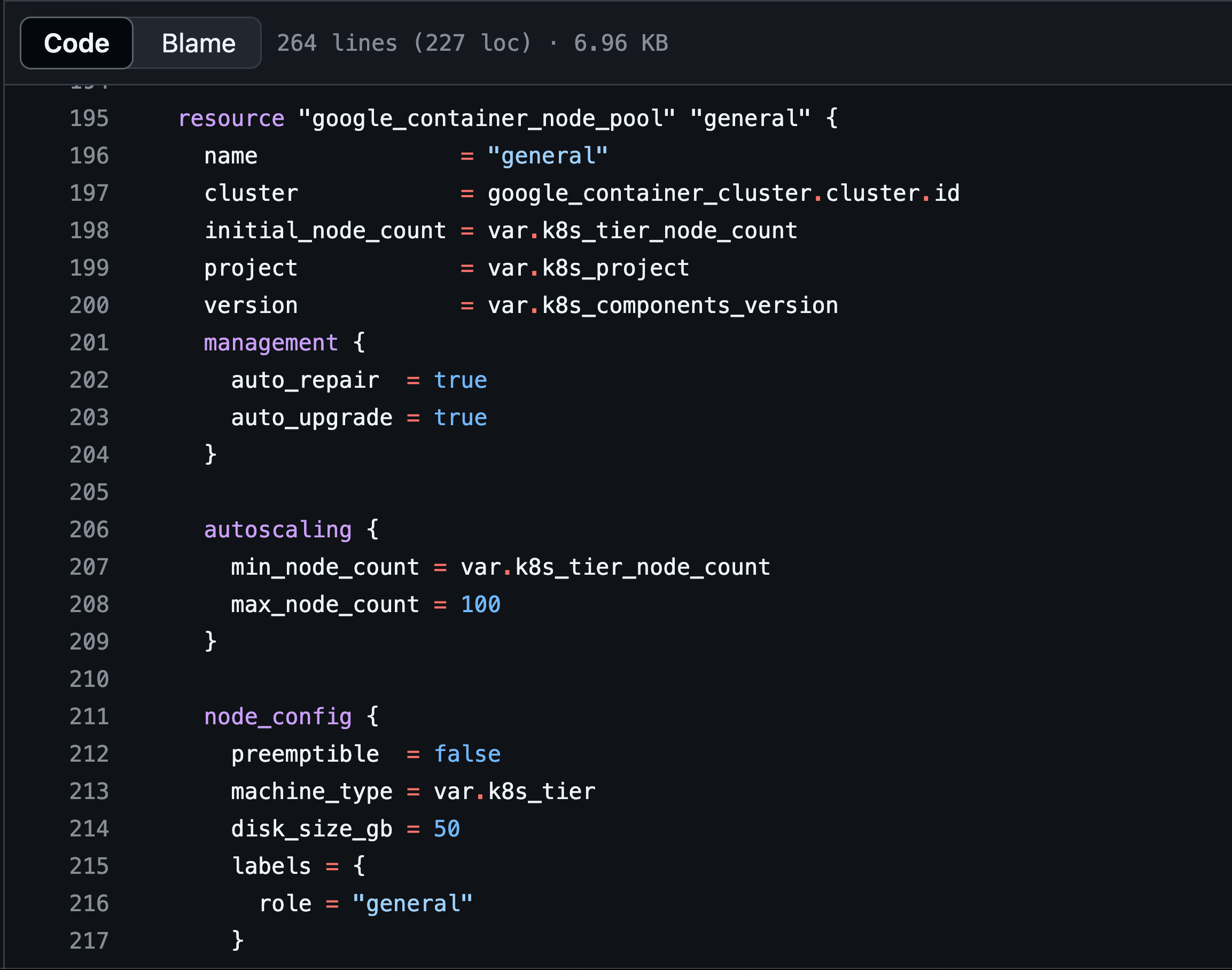

Local Disk and Node Provisioning

- The default disk size of each node in a GKE cluster is 100 GB. If you run only a few nodes and pods, reduce it and keep the autoscaling floor low:

  - `disk_size_gb = 50`

  - `min_node_count = 1`

  - `max_node_count = 100`

- By default the provisioning model is non-preemptible. If your workloads are fault tolerant, use preemptible / spot instances:

  - `preemptible = true`

  - `disk_size_gb = 50`
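The settings above could be combined in a single node pool definition. This is a minimal sketch rather than the author's actual configuration: the resource name `small_pool`, the machine type, `var.region`, and the referenced `google_container_cluster.primary` are assumptions for illustration.

```hcl
# Sketch only: assumes var.region and google_container_cluster.primary
# are declared elsewhere in the module.
resource "google_container_node_pool" "small_pool" {
  name     = "small-pool" # hypothetical name
  location = var.region
  cluster  = google_container_cluster.primary.name

  # Start with a single node and let the autoscaler add more on demand.
  autoscaling {
    min_node_count = 1
    max_node_count = 100
  }

  node_config {
    machine_type = "e2-medium" # assumed; choose the smallest type that fits
    disk_size_gb = 50          # half of the 100 GB default
    preemptible  = true        # only for fault-tolerant workloads
  }
}
```

In a regional cluster the `min_node_count` and `max_node_count` limits apply per zone, so the real floor and ceiling may be several times these values.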

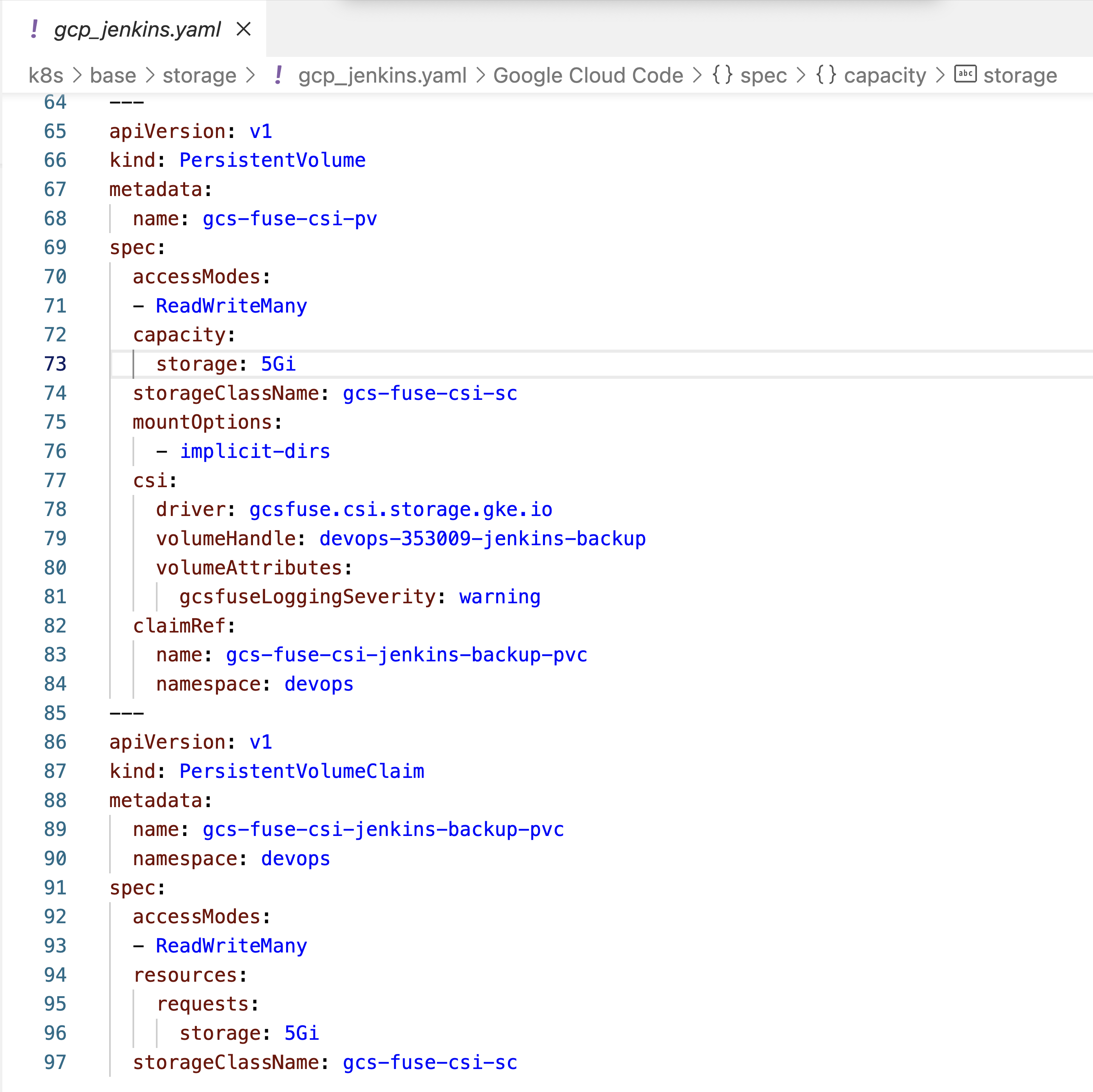

Remote Disks Using Persistent Volumes with GCS Bucket

- If your application does not need a high-performance disk such as SSD, mounting a Cloud Storage bucket as a disk through the Cloud Storage FUSE driver is a good option.
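One way to set this up in Terraform is to create the bucket and enable the Cloud Storage FUSE CSI driver add-on on the cluster. The sketch below makes several assumptions: the bucket and cluster names are hypothetical, `var.project_id` and `var.region` are declared elsewhere, and a real cluster would need further settings (networking, node pools) that are omitted here.

```hcl
# Sketch only: names are placeholders; var.project_id and var.region
# are assumed to be declared elsewhere.
resource "google_storage_bucket" "app_data" {
  name                        = "${var.project_id}-app-data" # hypothetical
  location                    = var.region
  uniform_bucket_level_access = true
}

resource "google_container_cluster" "primary" {
  name               = "primary"
  location           = var.region
  initial_node_count = 1

  # The FUSE CSI driver relies on Workload Identity to authorise bucket access.
  workload_identity_config {
    workload_pool = "${var.project_id}.svc.id.goog"
  }

  addons_config {
    gcs_fuse_csi_driver_config {
      enabled = true
    }
  }
}
```

Pods can then mount the bucket through the `gcsfuse.csi.storage.gke.io` driver, provided their Kubernetes service account has been granted access to the bucket.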

Use Internal Network and Inter Zonal Network in same region

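- Cloud providers charge more for public and inter-regional traffic than for traffic that stays inside a region, so keep service-to-service traffic internal where possible.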

- Use Private IP or Private Google Access.
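As an illustration of the Private Google Access option, the subnet used by the cluster nodes can enable it directly in Terraform. The network name, subnet name, and CIDR range below are assumptions chosen for the sketch, and `var.region` is assumed to be declared elsewhere.

```hcl
# Sketch only: names and the CIDR range are placeholders.
resource "google_compute_network" "vpc" {
  name                    = "app-vpc"
  auto_create_subnetworks = false
}

resource "google_compute_subnetwork" "gke" {
  name          = "gke-subnet"
  region        = var.region
  network       = google_compute_network.vpc.id
  ip_cidr_range = "10.10.0.0/20"

  # Lets VMs without external IPs reach Google APIs (GCS, Artifact Registry)
  # over Google's internal network.
  private_ip_google_access = true
}
```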

Use Regional Vendor Provided Artifact Storage for application artifacts and container images

- Using regional artifact storage can significantly reduce egress and ingress charges.

- You pay only for what you use, without having to manage storage that is under- or over-provisioned.
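A regional Docker repository in Artifact Registry can be declared in a few lines. This is a sketch under stated assumptions: the repository ID and description are hypothetical, and `var.region` is assumed to be the same region as the GKE cluster.

```hcl
# Sketch only: repository_id and description are placeholders;
# var.region should match the region of the GKE cluster.
resource "google_artifact_registry_repository" "images" {
  location      = var.region
  repository_id = "app-images"
  format        = "DOCKER"
  description   = "Container images for the application"
}
```

Images are then pushed and pulled using a path of the form `REGION-docker.pkg.dev/PROJECT/app-images/IMAGE`, which keeps image pulls inside the cluster's region.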

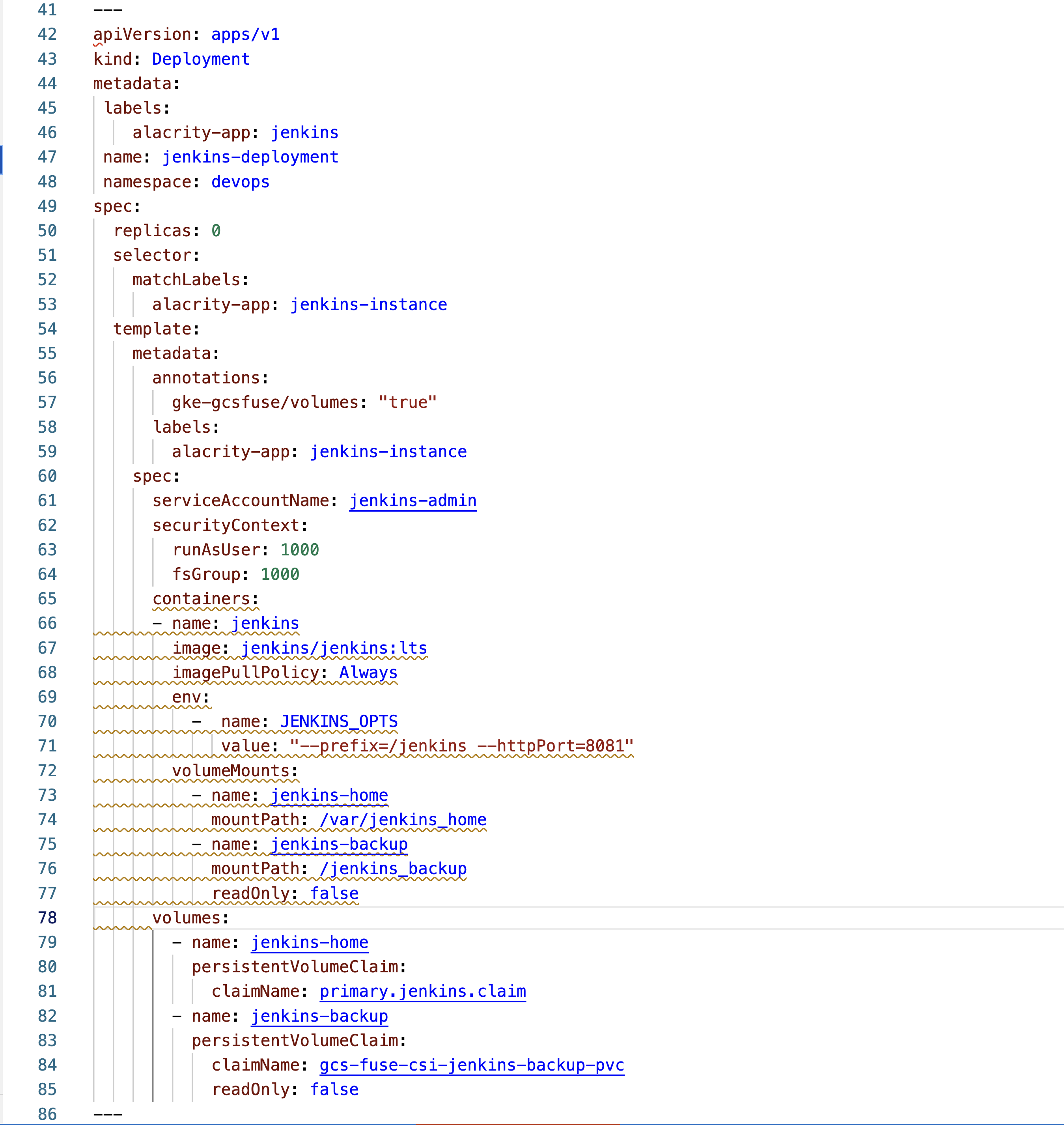

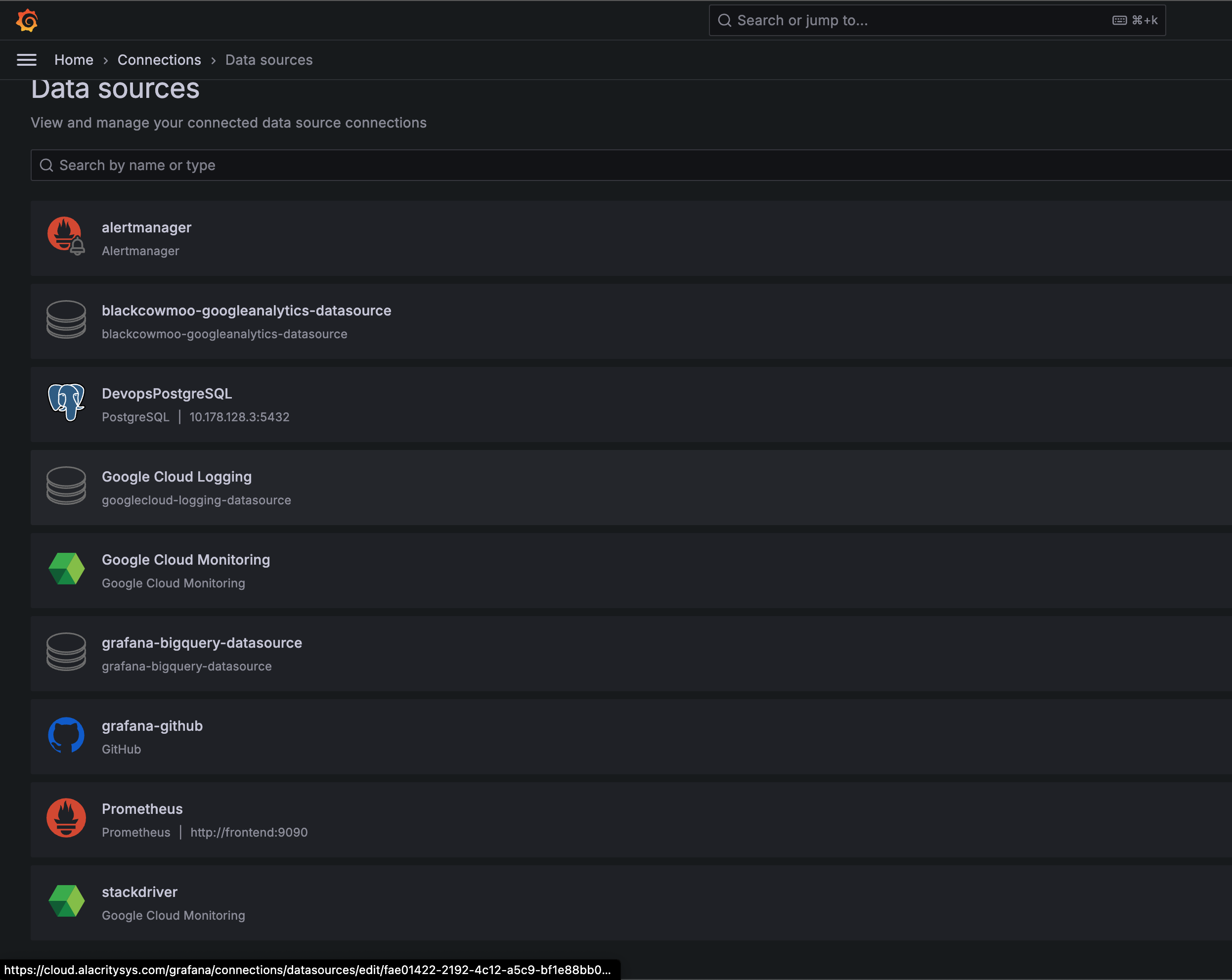

Use Vendor Provided Monitoring or install grafana monitoring stack

- Use vendor-specific data sources instead of installing your own. Vendor-provided data sources are very cheap.

- Create dashboards with thresholds for CPU, memory, disk, and network that give insight into spare capacity.
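If Grafana itself is managed with Terraform, the vendor data source can be registered through the Grafana provider. The sketch below assumes the `grafana/grafana` provider is configured elsewhere with a URL and credentials, that Grafana runs on GCE or GKE with a service account allowed to read monitoring data, and that `var.project_id` is declared elsewhere. The data source name is arbitrary.

```hcl
# Sketch only: provider URL and credentials are assumed to be configured elsewhere.
terraform {
  required_providers {
    grafana = {
      source = "grafana/grafana"
    }
  }
}

resource "grafana_data_source" "cloud_monitoring" {
  name = "Google Cloud Monitoring"
  type = "stackdriver" # plugin ID of the Google Cloud Monitoring data source

  json_data_encoded = jsonencode({
    authenticationType = "gce" # use the service account of the host VM / node
    defaultProject     = var.project_id
  })
}
```

Dashboards built on this data source can be kept as JSON files in the same Git repository, so threshold changes go through the same review process as infrastructure changes.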

External Resources and Code Repositories

- Install GKE cluster Using Terraform : https://github.com/agileguru/gke_ssl_Iac_kcert

- Install EKS cluster Using EKSCTL : https://github.com/agileguru/eks

- Sample Cloud Vendor metrics and dashboards in grafana : https://cloud.alacritysys.com/grafana/


Conclusion

Leveraging internal networks, GCS buckets with FUSE filesystem integration for GKE clusters, and Grafana dashboards creates a powerful cost optimization strategy for cloud infrastructure. Internal networks let organizations significantly reduce data transfer costs while maintaining secure communication between services. GCS buckets mounted through FUSE combine the scalability of cloud storage with a familiar filesystem interface, reducing the need for expensive persistent volumes. Grafana dashboards complete this optimization framework by offering real-time visibility into resource utilization and costs. This integrated approach reduces operational expenses while keeping the system maintainable, demonstrating that well-architected infrastructure can improve cost efficiency without sacrificing reliability.
